Google's recent introduction of Whisk, an image generation AI, has garnered significant attention. In this blog post, we explore its likely architecture and demonstrate how you can emulate its capabilities using open-source projects available on GitHub. Whether you're an AI enthusiast, developer, or content creator, this guide will give you the background you need to put multimodal AI models to work.

Understanding the Architecture of Whisk

While Google has not open-sourced Whisk, its approach provides a blueprint for building similar functionality with open-source tools. Whisk appears to combine scene and object recognition with image generation in a single end-to-end pipeline, which makes it useful across a wide range of applications.

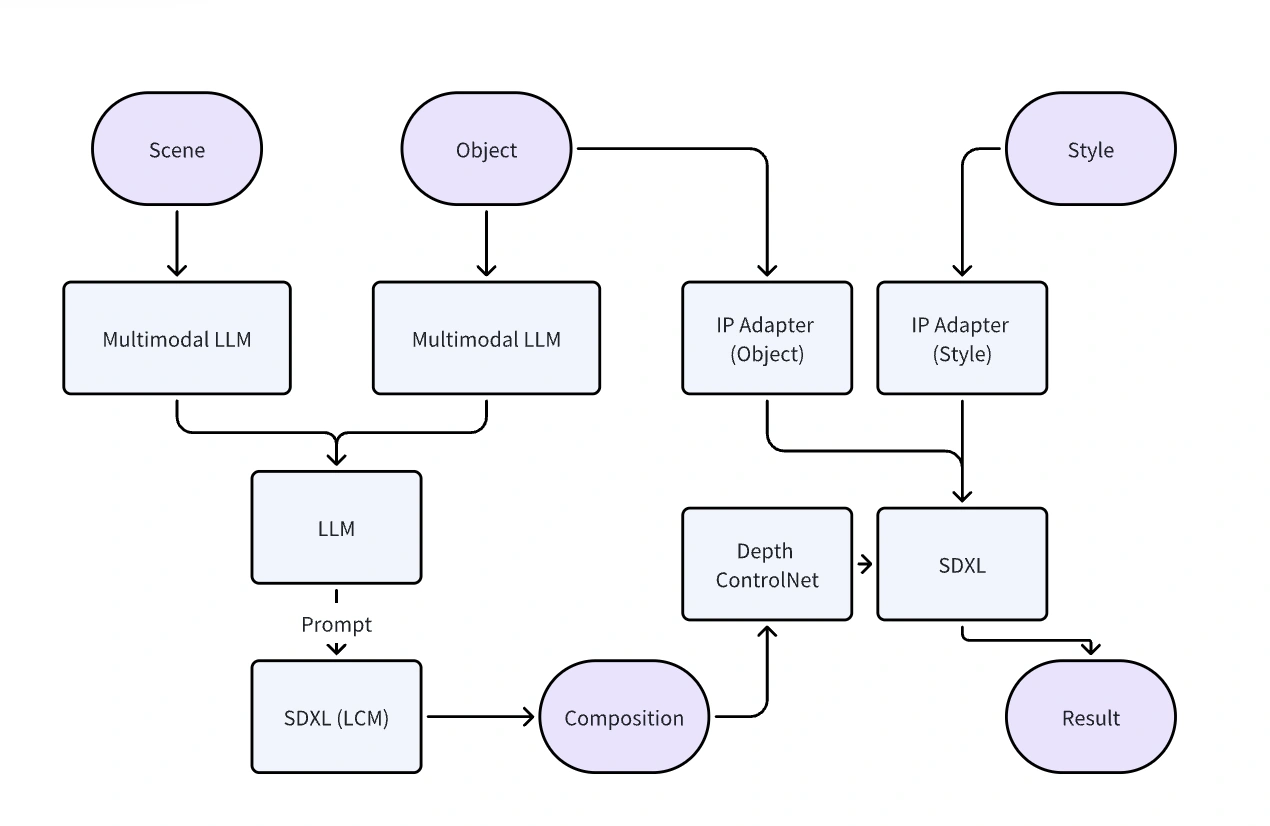

At its core, Whisk is likely an end-to-end large multimodal model that processes several types of input to generate coherent, contextually relevant images. Here's a breakdown of the presumed workflow:

- Input Processing: Users provide multiple images depicting scenes and objects. These images are fed into a multimodal model that extracts detailed descriptions of the scene and individual objects.

- Scene and Object Description: The multimodal model generates comprehensive descriptions of the provided images, capturing essential elements such as lighting, perspective, and object attributes.

- Composition Planning: Leveraging a large language model (LLM) comparable to GPT-4o, Whisk imagines a plausible and aesthetically pleasing composition based on the descriptions. This step ensures that the generated image maintains logical and visual coherence.

- Depth Map Generation: Using SDXL in conjunction with LCM (Latent Consistency Model) technology, Whisk rapidly generates a depth map. This depth map serves as a foundational guide for the final image composition, focusing on the spatial arrangement rather than image quality.

- Style and Object Embedding: The next step embeds specific styles and incorporates objects (such as characters or animals) into the image generation process. This ensures that the generated image aligns with the desired artistic direction and content requirements.

- Final Image Generation: The depth map acts as a conditional control, guiding the AI to produce a high-quality, contextually accurate image that adheres to the planned composition and embedded styles.
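To make the data flow concrete, the presumed stages above can be sketched as a chain of plain Python functions. This is only an illustration of how the stages hand data to one another, not Whisk's actual implementation: every function here is a hypothetical stand-in that returns placeholder strings instead of calling a real model, and the separate "style" input role is our assumption.

```python
from dataclasses import dataclass


@dataclass
class Description:
    role: str  # "scene", "object", or "style" (the "style" role is an assumption)
    text: str


@dataclass
class Plan:
    prompt: str      # content description the generator is conditioned on
    style_text: str  # style description, kept separate for the embedding step


def describe_image(role: str, image_path: str) -> Description:
    """Hypothetical stand-in for the multimodal description model."""
    return Description(role=role, text=f"caption of {image_path}")


def plan_composition(descriptions: list[Description]) -> Plan:
    """Hypothetical stand-in for the LLM that plans the composition."""
    content = [d.text for d in descriptions if d.role != "style"]
    style = [d.text for d in descriptions if d.role == "style"]
    return Plan(prompt=", ".join(content), style_text=", ".join(style))


def draft_depth_map(plan: Plan) -> str:
    """Hypothetical stand-in for the fast SDXL + LCM depth map pass."""
    return f"depth_map({plan.prompt})"


def render_final(plan: Plan, depth_map: str) -> dict:
    """Hypothetical stand-in for the depth-conditioned final render."""
    return {"prompt": plan.prompt, "style": plan.style_text, "control": depth_map}


def run_pipeline(inputs: list[tuple[str, str]]) -> dict:
    """Run all stages in order on (role, image_path) pairs."""
    descriptions = [describe_image(role, path) for role, path in inputs]
    plan = plan_composition(descriptions)
    return render_final(plan, draft_depth_map(plan))


result = run_pipeline([
    ("scene", "beach.png"),
    ("object", "corgi.png"),
    ("style", "watercolor.png"),
])
print(result)
```

Keeping each stage behind its own function means any stand-in can later be swapped for a real model call without touching the rest of the chain.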

Replicating Whisk with ComfyUI and GitHub

While Whisk itself isn't open source, you can achieve similar results by leveraging open-source projects available on GitHub and integrating them with ComfyUI, a versatile user interface for managing AI workflows. Here's a step-by-step guide to creating a Whisk-like image generation pipeline:

- Scene and Object Analysis: Start with multimodal models that can process images and produce descriptive metadata. OpenAI's open-source CLIP can match images against text labels, while a vision-capable model such as GPT-4o (proprietary, accessed through OpenAI's API) can write full descriptions of image content.

- Composition Planning with LLMs: Integrate a powerful LLM such as GPT-4 to interpret the scene and object descriptions. This model helps conceptualize a coherent and visually appealing composition based on the provided inputs.

- Generating Depth Maps with SDXL and LCM: Leverage SDXL along with LCM to create depth maps quickly. These maps establish the spatial framework of your final image, ensuring that elements are proportionally and logically placed.

- Embedding Styles and Objects: Incorporate specific styles and objects into your generation process using open-source libraries such as InstantStyle and IP-Adapter. These tools let you embed artistic styles and integrate various objects, adding richness and diversity to the generated images.

- Final Image Generation: Use the depth maps as conditional controls within your image generation pipeline. Tools like ComfyUI can orchestrate this process so that the final output matches your planned composition and style. By managing these conditions carefully, you can produce high-quality images that approach the sophistication of Google's Whisk.
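As a rough illustration of the final step, here is a minimal sketch that builds a depth-conditioned graph in ComfyUI's API (JSON) workflow format, where each node is keyed by an id and links are written as `[source_node_id, output_index]`. The node class names are ComfyUI built-ins as far as we know, but the checkpoint, ControlNet, and image file names are hypothetical placeholders, and the sampler settings are arbitrary starting points. Style and object embedding with IP-Adapter or InstantStyle relies on custom nodes and is left out here.

```python
import json


def build_depth_controlled_workflow(prompt, depth_image, checkpoint, controlnet, seed=0):
    """Return a ComfyUI API-format graph: node id -> {class_type, inputs}."""
    return {
        # Outputs of the checkpoint loader: 0 = model, 1 = CLIP, 2 = VAE
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": checkpoint}},
        # Positive prompt (the planned composition) and an empty negative prompt
        "2": {"class_type": "CLIPTextEncode",
              "inputs": {"text": prompt, "clip": ["1", 1]}},
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"text": "", "clip": ["1", 1]}},
        # Depth map produced by the earlier draft step
        "4": {"class_type": "LoadImage", "inputs": {"image": depth_image}},
        "5": {"class_type": "ControlNetLoader",
              "inputs": {"control_net_name": controlnet}},
        # The depth map conditions the positive prompt
        "6": {"class_type": "ControlNetApply",
              "inputs": {"conditioning": ["2", 0], "control_net": ["5", 0],
                         "image": ["4", 0], "strength": 0.8}},
        "7": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
        "8": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["6", 0],
                         "negative": ["3", 0], "latent_image": ["7", 0],
                         "seed": seed, "steps": 25, "cfg": 6.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": 1.0}},
        "9": {"class_type": "VAEDecode",
              "inputs": {"samples": ["8", 0], "vae": ["1", 2]}},
        "10": {"class_type": "SaveImage",
               "inputs": {"images": ["9", 0], "filename_prefix": "whisk_like"}},
    }


workflow = build_depth_controlled_workflow(
    prompt="a corgi on a beach at sunset, watercolor",
    depth_image="draft_depth.png",                  # hypothetical file name
    checkpoint="sd_xl_base_1.0.safetensors",        # hypothetical file name
    controlnet="controlnet_depth_sdxl.safetensors"  # hypothetical file name
)
payload = json.dumps({"prompt": workflow})
print(len(workflow), "nodes")
```

The resulting `payload` is the kind of JSON body a running ComfyUI server accepts on its `/prompt` endpoint, assuming the referenced model files are actually installed on that server.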

Google's Whisk represents a significant advancement in image generation AI, offering seamless integration of multimodal data and sophisticated generation techniques. While Whisk itself remains proprietary, the open-source ecosystem provides ample tools and frameworks to replicate its functionality. By leveraging ComfyUI and exploring relevant projects on GitHub, you can build a comprehensive image generation system tailored to your specific needs.

Looking ahead, we hope that members of the AI community will develop a ComfyUI version of Whisk, further democratizing access to this powerful tool. Projects like aoGen are already making strides in this direction, so stay tuned for exciting developments.