According to recent reports, the DeepSeek team has taken an unusual path. Its R1 model, trained at remarkably low cost, had already shocked Silicon Valley and Wall Street. Now, even more surprisingly, it has emerged that the team built this super AI without relying on CUDA. Foreign media reported that in just two months, DeepSeek trained a 671-billion-parameter MoE language model (DeepSeek-V3) on a cluster of 2,048 H800 GPUs, reportedly achieving 10 times the efficiency of top AI models. Most strikingly, this breakthrough was achieved not with CUDA but through extensive fine-grained optimizations and programming in NVIDIA's assembly-like PTX (Parallel Thread Execution).
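To put "two months on 2,048 GPUs" in perspective, the short Python sketch below converts it into GPU-hours. It is a back-of-the-envelope estimate, not a reported figure: it assumes "two months" means 60 days and that every GPU ran around the clock, and the function name `gpu_hours` is purely illustrative.

```python
# Back-of-the-envelope GPU-hour estimate for the training run described above.
# Assumptions (not reported figures): "two months" = 60 days, every GPU busy 24/7.

NUM_GPUS = 2048  # H800 cluster size, from the article
DAYS = 60        # assumed length of "two months"


def gpu_hours(num_gpus: int, days: int, hours_per_day: int = 24) -> int:
    """Total GPU-hours if every GPU runs for the whole period."""
    return num_gpus * days * hours_per_day


if __name__ == "__main__":
    print(f"Estimate: {gpu_hours(NUM_GPUS, DAYS):,} GPU-hours")
```

Under these assumptions the estimate is 2,949,120 GPU-hours; the real figure depends on the exact schedule and on how busy the hardware actually was.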

This news once again stirred up the AI community, with netizens expressing shock at their strategy: "If there's any group of people in the world crazy enough to say 'CUDA is too slow! Let's just write PTX directly!' it would definitely be those former quantitative traders."

Others wondered what it would mean if DeepSeek open-sourced its CUDA alternative.

Genius geeks fine-tuning PTX to maximize GPU performance

NVIDIA's PTX (Parallel Thread Execution) is an intermediate instruction set architecture designed specifically for NVIDIA GPUs. It sits between high-level GPU programming languages (such as CUDA C/C++ or other language front ends) and low-level machine code (SASS, NVIDIA's native GPU assembly language).

PTX is a low-level instruction set architecture that exposes the GPU as a data-parallel computing device, enabling fine-grained optimizations such as register allocation and thread/warp-level tuning that are difficult or impossible to express in languages like CUDA C/C++. When PTX is compiled to SASS, the resulting code is optimized for a specific NVIDIA GPU generation.
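The position of PTX between CUDA C/C++ and SASS can be illustrated with the standard CUDA toolkit command for each stage. The Python sketch below only builds and prints the command lines; it assumes a hypothetical source file `kernel.cu`, targets `sm_90` (the Hopper architecture used by the H800), and `ptx_pipeline` is an illustrative helper name.

```python
# Minimal sketch: the CUDA C++ -> PTX -> SASS toolchain as command lines.
# Assumptions: hypothetical input "kernel.cu"; target sm_90 (Hopper, e.g. H800).
# The commands are only built and printed here, not executed.
from typing import List


def ptx_pipeline(source: str = "kernel.cu", arch: str = "sm_90") -> List[List[str]]:
    """Return the command for each compilation stage, in order."""
    stem = source.rsplit(".", 1)[0]
    return [
        # Stage 1: the nvcc front end lowers CUDA C++ to PTX (a virtual ISA, plain text).
        ["nvcc", "-ptx", f"-arch={arch}", source, "-o", f"{stem}.ptx"],
        # Stage 2: ptxas assembles PTX into a cubin holding SASS for one GPU generation.
        ["ptxas", f"-arch={arch}", f"{stem}.ptx", "-o", f"{stem}.cubin"],
        # Stage 3: cuobjdump disassembles the cubin so the SASS can be inspected.
        ["cuobjdump", "-sass", f"{stem}.cubin"],
    ]


if __name__ == "__main__":
    for command in ptx_pipeline():
        print(" ".join(command))
```

Running these commands requires the CUDA toolkit. Because the SASS produced in the second stage targets a single architecture, building for another GPU generation means changing `arch`. In practice, hand-written PTX is also often embedded directly in CUDA C++ through inline `asm()` statements rather than kept in standalone files.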

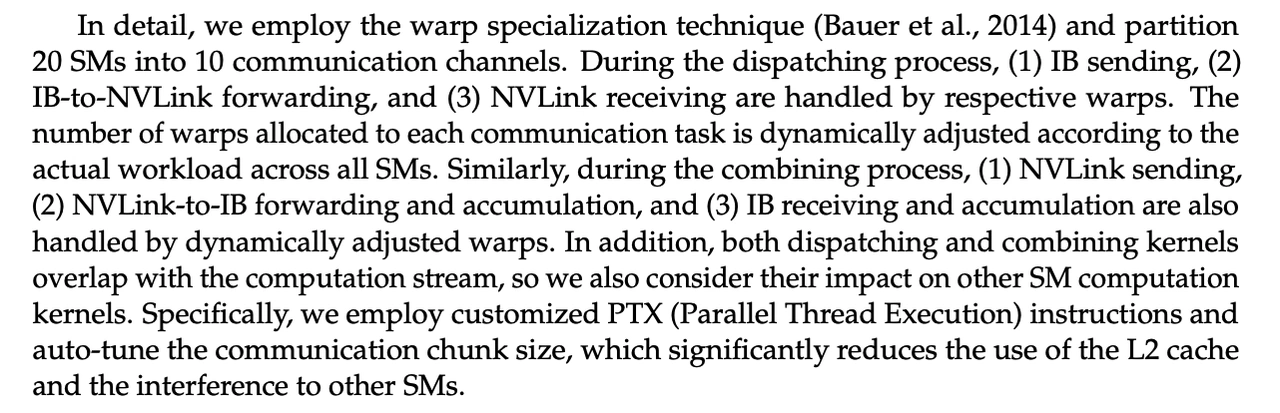

During training of the V3 model, DeepSeek reconfigured how its NVIDIA H800 GPUs were used:

“Out of 132 streaming multiprocessors, 20 were allocated for inter-server communication, primarily for data compression and decompression, to overcome processor connectivity limitations and improve transaction processing speed.”

To maximize performance, DeepSeek also implemented advanced pipelining algorithms through further fine-grained thread/warp-level tuning. These optimizations go far beyond conventional CUDA development and are extremely difficult to maintain, but they clearly demonstrate the DeepSeek team's exceptional technical ability.
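The trade-off behind reserving 20 of the 132 SMs for communication can be illustrated with a deliberately simple timing model. This is a hypothetical Python sketch, not DeepSeek's method or data: it assumes compute time scales inversely with the number of SMs doing computation and that the reserved SMs completely hide communication behind computation, and the workload numbers are made up.

```python
# Toy model: is it worth reserving some SMs to overlap communication with compute?
# Hypothetical assumptions (not DeepSeek's numbers): compute time = work / compute SMs,
# and reserved SMs fully hide communication behind computation.

TOTAL_SMS = 132  # SMs on an H800, from the article
COMM_SMS = 20    # SMs reportedly reserved for inter-server communication


def serial_step_time(compute_work: float, comm_time: float,
                     total_sms: int = TOTAL_SMS) -> float:
    """All SMs compute first, then the step waits for communication (no overlap)."""
    return compute_work / total_sms + comm_time


def overlapped_step_time(compute_work: float, comm_time: float,
                         total_sms: int = TOTAL_SMS, comm_sms: int = COMM_SMS) -> float:
    """Fewer SMs compute while the reserved SMs communicate at the same time."""
    compute_sms = total_sms - comm_sms
    return max(compute_work / compute_sms, comm_time)


if __name__ == "__main__":
    work = float(TOTAL_SMS)  # normalized: 1.0 time unit of compute on all 132 SMs
    for comm in (0.05, 0.5):  # made-up communication times per step
        serial = serial_step_time(work, comm)
        overlapped = overlapped_step_time(work, comm)
        print(f"comm={comm}: serial={serial:.3f}, overlapped={overlapped:.3f}")
```

With these made-up numbers, reserving SMs makes a step slower when communication is small (0.05 time units) but faster when it is large (0.5 units). A split like this only pays off when inter-server traffic is a real bottleneck, as the connectivity limitations mentioned above suggest it was on the H800.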

Under the dual pressures of global GPU shortages and U.S. export restrictions, companies like DeepSeek have had to seek innovative solutions. Fortunately, DeepSeek achieved significant breakthroughs in this area.

Some developers believe that "low-level GPU programming is the right direction. The more optimization you achieve, the more you can reduce costs or increase the performance budget available for other improvements without extra cost."

The breakthrough has also had a significant market impact: some investors believe new models will need less high-performance hardware, which could hurt sales at companies like NVIDIA.

However, industry veterans, including former Intel CEO Pat Gelsinger, believe that AI applications will make full use of all the computing power available. Gelsinger sees DeepSeek's breakthrough as a new way to embed AI capabilities in a wide range of low-cost, mass-market devices.

Is CUDA's Competitive Moat Disappearing?

Does DeepSeek's emergence mean that developing cutting-edge LLMs no longer requires massive GPU clusters? Will the enormous investments in computational resources by Google, OpenAI, Meta, and xAI ultimately go to waste? The consensus among AI developers is that they will not.

What is certain, however, is that there is still enormous room for optimization in data processing and algorithms, and more innovative optimization methods are bound to emerge.

With the open-source release of DeepSeek's V3 model, detailed technical information has been disclosed in its technical report. The report documents DeepSeek's deep, low-level optimizations. In short, the extent of the work can be summed up as "they rebuilt the entire system from the ground up."

As mentioned above, when training V3 on H800 GPUs, DeepSeek customized how the GPU's core computing units (Streaming Multiprocessors, or SMs) were used. Of the 132 SMs, it dedicated 20 to inter-server communication tasks rather than computation.

This customization was done at the level of PTX (Parallel Thread Execution), NVIDIA's low-level GPU instruction set. PTX operates close to assembly language, enabling fine-grained optimizations such as register allocation and thread/warp-level tuning. That degree of precise control, however, is complex and difficult to maintain. This is why developers usually work in a high-level language such as CUDA, which delivers sufficient performance for most parallel programming tasks without any low-level tuning. Only when GPU resource efficiency must be pushed to its limits, or special optimization requirements must be met, do developers turn to PTX.

However, technical barriers still remain!

Regarding DeepSeek's technical breakthrough, Dr. Ian Cutress states: "DeepSeek's use of PTX does not eliminate CUDA's technical barriers."

CUDA is a high-level language. It simplifies writing code and interfacing with NVIDIA GPUs, and it supports rapid iterative development. CUDA code can be further tuned by adjusting the underlying PTX, and its core libraries are already mature. Today, the vast majority of production-grade software is built on CUDA. PTX, by contrast, is closer to a human-readable GPU assembly language: it operates at a low level and allows micro-level optimizations.

Choosing to program in PTX means losing access to the pre-built CUDA libraries mentioned above. It is an extremely tedious undertaking that requires deep expertise in hardware and runtime issues. However, developers who truly understand what they are doing can indeed achieve better runtime performance and optimization.

For now, CUDA remains the mainstream choice in the NVIDIA ecosystem. Developers looking to squeeze an extra 10-20% of performance or power efficiency out of their workloads, such as companies that deploy models in the cloud and sell tokens as a service, have indeed gone below CUDA to optimize at the PTX level. They are willing to invest the time because it pays off in the long run.
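Why a 10-20% gain matters to a token-selling business can be made concrete with a short calculation. This Python sketch assumes that serving cost is dominated by GPU time, so cost per token scales with the inverse of throughput; the $10 million annual GPU bill and the helper name `cost_per_token_reduction` are purely hypothetical.

```python
# Minimal sketch: turning a throughput gain into a lower cost per token.
# Assumption: serving cost is dominated by GPU time, so cost/token ~ 1 / throughput.


def cost_per_token_reduction(throughput_gain: float) -> float:
    """Fractional drop in cost per token for a fractional throughput gain (0.10 = 10%)."""
    return 1.0 - 1.0 / (1.0 + throughput_gain)


if __name__ == "__main__":
    annual_gpu_bill = 10_000_000  # hypothetical yearly GPU spend in dollars
    for gain in (0.10, 0.20):
        cut = cost_per_token_reduction(gain)
        savings = annual_gpu_bill * cut  # same token volume served with less GPU time
        print(f"{gain:.0%} more throughput -> {cut:.1%} lower cost per token "
              f"(about ${savings:,.0f} per year)")
```

Under these assumptions, a 10% throughput gain cuts cost per token by about 9.1% and a 20% gain by about 16.7%. Because the savings recur for as long as the optimized kernels are in service, they can outweigh the one-time engineering effort of PTX-level tuning.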

Note that hand-tuned PTX is usually optimized for specific hardware and is difficult to port to other GPUs without purpose-written adaptation logic. Moreover, manually tuning compute kernels demands enormous patience, courage, and a rare ability to stay calm, because a memory access error might surface only once every 5,000 cycles.

Of course, we fully understand and respect those who genuinely need to use PTX and developers who are adequately compensated to handle these issues. For other developers, continuing to use CUDA or other CUDA-based high-level variants (or MLIR) remains the wise choice.

Experience AI's Limitless Potential with aoGen

As AI technology continues to make breakthrough progress, websites like aoGen can meet commercial needs such as generating AI fashion models, AI image enhancement, and AI photo editing. Interested users are welcome to visit and explore these capabilities.